Designing Reliable Navigation Behaviors for Autonomous Agents in Partially Observable Grid-world Environments

2024 International Joint Conference on Neural Networks (IJCNN), Yokohama, Japan, 2024

Abstract

Deciding where to go is one of the primary challenges in designing an agent that can explore an unknown environment. Grid-worlds provide a flexible framework for representing different variations of this problem, allowing for various types of goals and constraints. Typically, agents move one cell at a time, gathering new information at each time step. However, recomputing a new action after each step can lead to unintended behaviors, such as indecision and forgetting about previous goals. To mitigate this, we define a set of persistent feature layers that can be used by either a linear weighted policy or a neural network approach to identify potential destination locations. The outputs of these policies are processed using knowledge of the environment to ensure that objectives are met in a timely and effective manner. We demonstrate how to train and evaluate a U-Net model in a custom grid-world environment and provide guidance and suggestions for how to use this approach to build complex agent behaviors.
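The abstract describes a linear weighted policy that scores candidate destinations from persistent feature layers, plus a post-processing step that uses knowledge of the environment. The following is a minimal sketch of that idea under stated assumptions. The layer names (`"unexplored"`, `"target_seen"`, `"obstacle"`), the weights, the breadth-first reachability filter, and the "keep the current goal until it is reached or becomes unreachable" rule are all hypothetical choices made for illustration. They are not the paper's definitions. The commit rule shows one way to avoid the indecision mentioned above: the agent does not re-decide its destination on every step.

```python
from collections import deque

# Illustrative only: layer names, weights, and the commit rule are assumptions,
# not the paper's definitions.

def score_cells(layers, weights):
    """Weighted sum of binary feature layers at every cell of the grid."""
    first = next(iter(layers.values()))
    rows, cols = len(first), len(first[0])
    scores = [[0.0] * cols for _ in range(rows)]
    for name, w in weights.items():
        for r in range(rows):
            for c in range(cols):
                scores[r][c] += w * layers[name][r][c]
    return scores


def reachable(obstacles, start):
    """Breadth-first search over 4-connected free cells starting at `start`."""
    rows, cols = len(obstacles), len(obstacles[0])
    seen = {start}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and not obstacles[nr][nc] and (nr, nc) not in seen):
                seen.add((nr, nc))
                queue.append((nr, nc))
    return seen


def next_destination(layers, weights, agent, current=None):
    """Keep the committed destination while it is still valid; otherwise pick a new one."""
    free = reachable(layers["obstacle"], agent)
    if current is not None and current != agent and current in free:
        return current  # persist the previous goal instead of re-deciding every step
    candidates = sorted(free - {agent})
    if not candidates:
        return None
    scores = score_cells(layers, weights)
    # max() keeps the first maximum, so ties resolve to the lowest (row, col).
    return max(candidates, key=lambda rc: scores[rc[0]][rc[1]])


layers = {
    "obstacle":    [[0, 0, 0, 0], [0, 1, 1, 0], [0, 0, 0, 0], [0, 0, 0, 0]],
    "unexplored":  [[0, 0, 0, 0], [0, 0, 0, 1], [0, 0, 0, 1], [1, 1, 1, 1]],
    "target_seen": [[0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 1, 0]],
}
weights = {"unexplored": 1.0, "target_seen": 5.0}
goal = next_destination(layers, weights, agent=(0, 0))
```

On the next step the agent passes `current=goal`. It keeps heading to the same cell even if the scores shift, and it only re-scores the map once it arrives or the goal becomes unreachable.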

Media

The agent receives a set of binary feature layers as its local observation.

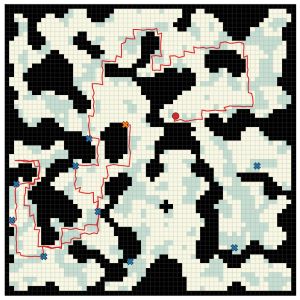

As the agent moves, the local observations are aggregated into persistent feature layers.
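The sketch below shows one way to aggregate local observations into persistent global layers. It assumes a square window of side `2 * radius + 1` centred on the agent and a "latest observation wins" overwrite rule. It also adds an extra hypothetical `"observed"` layer that records every cell seen so far. These details are illustrative assumptions, not the paper's exact procedure. The key property is that information survives after a cell leaves the agent's field of view.

```python
# Illustrative sketch: the window shape, layer names, and "latest observation
# wins" overwrite rule are assumptions, not the paper's exact procedure.

def empty_layers(names, rows, cols):
    """Create zero-filled global layers, one grid per feature name."""
    return {name: [[0] * cols for _ in range(rows)] for name in names}


def aggregate(global_layers, local_layers, agent, radius):
    """Write a (2*radius+1)-square local observation centred on `agent` into the global map.

    Window cells that fall outside the map are skipped. The "observed" layer
    marks every cell seen at least once, so it persists after the cell leaves
    the field of view.
    """
    observed = global_layers["observed"]
    rows, cols = len(observed), len(observed[0])
    ar, ac = agent
    size = 2 * radius + 1
    for lr in range(size):
        for lc in range(size):
            gr, gc = ar + lr - radius, ac + lc - radius
            if not (0 <= gr < rows and 0 <= gc < cols):
                continue
            observed[gr][gc] = 1
            for name, local in local_layers.items():
                global_layers[name][gr][gc] = local[lr][lc]
    return global_layers


world = empty_layers(["observed", "obstacle"], 5, 5)
# First observation at the top-left corner: only a 2x2 part of the window is on the map.
aggregate(world, {"obstacle": [[1, 0, 0], [0, 0, 0], [0, 0, 1]]}, agent=(0, 0), radius=1)
# Second observation far away: the obstacle seen earlier at (1, 1) is kept.
aggregate(world, {"obstacle": [[0, 0, 0], [0, 0, 0], [0, 0, 0]]}, agent=(3, 3), radius=1)
```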

Exploration strategy.

Balanced strategy.

Exploitation strategy.
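With a linear weighted policy, one plausible way to obtain exploration, balanced, and exploitation behaviours is to change the weight vector applied to the same feature layers. The presets, feature names, and Manhattan-distance penalty below are hypothetical values chosen for illustration. They are not values reported in the paper.

```python
# Hypothetical weight presets; the feature names, values, and the distance
# penalty are illustrative assumptions, not values reported in the paper.
STRATEGIES = {
    "exploration":  {"unexplored": 1.0, "target_seen": 0.2},
    "balanced":     {"unexplored": 0.6, "target_seen": 0.6},
    "exploitation": {"unexplored": 0.2, "target_seen": 1.0},
}
DISTANCE_WEIGHT = -0.05  # small cost per Manhattan step from the agent


def pick(layers, weights, agent):
    """Return the cell with the highest weighted feature score minus a distance cost."""
    rows, cols = len(layers["unexplored"]), len(layers["unexplored"][0])
    best, best_score = None, float("-inf")
    for r in range(rows):
        for c in range(cols):
            if (r, c) == agent:
                continue
            score = sum(w * layers[name][r][c] for name, w in weights.items())
            score += DISTANCE_WEIGHT * (abs(r - agent[0]) + abs(c - agent[1]))
            if score > best_score:
                best, best_score = (r, c), score
    return best


layers = {
    # One nearby unexplored cell...
    "unexplored":  [[0, 1, 0, 0, 0], [0, 0, 0, 0, 0], [0, 0, 0, 0, 0]],
    # ...and one farther cell where a target was seen.
    "target_seen": [[0, 0, 0, 0, 0], [0, 0, 0, 0, 0], [0, 0, 0, 0, 1]],
}
choices = {name: pick(layers, w, agent=(0, 0)) for name, w in STRATEGIES.items()}
```

Under these assumed weights, the exploration and balanced presets head for the nearby unexplored cell. The exploitation preset travels to the farther cell where the target was seen.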

Solving the target detection problem using a neural network policy. Example 1.

Solving the target detection problem using a neural network policy. Example 2.
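In the neural network variant, the abstract says the policy output is processed using knowledge of the environment. The following is a minimal sketch of such a step. It assumes the network (a U-Net in the paper) emits one score per grid cell and that obstacle or already-explored cells are invalid destinations. A hard-coded `heatmap` stands in for the network output, and `postprocess` is a hypothetical helper, not the paper's code.

```python
import math

# Assumption: the network emits one score (logit) per grid cell, and cells that
# are obstacles or already explored are invalid destinations.

def postprocess(heatmap, invalid):
    """Mask invalid cells, normalise the remaining scores with a softmax, and return the argmax."""
    cells = [(r, c)
             for r in range(len(heatmap))
             for c in range(len(heatmap[0]))
             if not invalid[r][c]]
    if not cells:
        return None, {}
    top = max(heatmap[r][c] for r, c in cells)  # subtract max for numerical stability
    weights = {(r, c): math.exp(heatmap[r][c] - top) for r, c in cells}
    total = sum(weights.values())
    probs = {cell: w / total for cell, w in weights.items()}
    return max(cells, key=probs.get), probs


heatmap = [[5.0, 1.0],
           [0.0, 2.0]]
invalid = [[1, 0],   # (0, 0) is masked even though its raw score is highest
           [0, 0]]
destination, probs = postprocess(heatmap, invalid)
```

Masking before the softmax means the network never has to learn to avoid cells that the environment model already rules out.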