Explainable LLM-Based Drone Autonomy Derived from Partially Observable Geospatial Data

Proc. SPIE 13461, Geospatial Informatics XV, 2025

Abstract

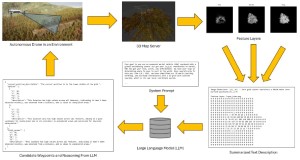

Human teams are increasingly required to collaborate with machines (e.g., drones) in contexts that lack clearly defined formal rules for their interactions. Effective teamwork in these domains often requires that humans understand the machine's decisions and that communication flows in both directions. In this work, we explore geospatial human-AI teaming in which the AI constructs an uncertain, partially observable spatio-temporal representation of its environment. We demonstrate how large language models (LLMs) can facilitate explainable autonomy: our agent uses an LLM for planning and navigation. The agent's decisions are based on summarized data layers derived from a probabilistic occupancy voxel map, including elevation, exploration fringe, observation distance, and time since last observation. These layers, combined with any domain-specific directives, guide the agent's decision-making. Beyond path planning, users can interact with the agent at any time to understand or alter its course of action. Although the framework is demonstrated in a simulated environment, which allows variables to be controlled and ground truth to be known, it is designed to transfer to real-world applications. We present several scenarios of increasing complexity: a simple proof of concept using one-shot actions on a single feature layer, a scenario with multiple conflicting features and several plausible outcomes, and a multi-step, real-time simulation in a fully 3D-rendered environment.
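The abstract does not say how the voxel map is reduced to 2D layers or how those layers reach the LLM. The following is a minimal sketch under stated assumptions, not the authors' implementation: `VoxelMap`, `observe_column`, `summarize_layers`, `layer_to_text`, `build_prompt`, the probability thresholds, and the text-grid prompt format are all hypothetical. The sensor update is a crude stand-in rather than a proper Bayesian (log-odds) occupancy update; the point is only to show how the four named layers could be summarized per map column and combined with a directive.

```python
from typing import Dict, List, Optional

# Illustrative thresholds (assumptions, not values from the paper).
PRIOR = 0.5        # occupancy probability of a never-observed voxel
OCCUPIED = 0.65    # a voxel above this probability is treated as occupied

Grid = List[List[Optional[float]]]


class VoxelMap:
    """Hypothetical probabilistic occupancy voxel map, indexed prob[x][y][z]."""

    def __init__(self, nx: int, ny: int, nz: int, cell_size: float = 1.0):
        self.nx, self.ny, self.nz, self.cell_size = nx, ny, nz, cell_size
        self.prob = [[[PRIOR] * nz for _ in range(ny)] for _ in range(nx)]
        self.last_seen: Grid = [[None] * ny for _ in range(nx)]  # time each column was last observed
        self.obs_dist: Grid = [[None] * ny for _ in range(nx)]   # sensor range at that observation

    def observe_column(self, x: int, y: int, height: int, t: float, dist: float) -> None:
        """Crude stand-in for a sensor update: voxels below `height` become
        likely occupied, the rest likely free."""
        for z in range(self.nz):
            self.prob[x][y][z] = 0.9 if z < height else 0.1
        self.last_seen[x][y] = t
        self.obs_dist[x][y] = dist


def summarize_layers(m: VoxelMap, now: float) -> Dict[str, list]:
    """Collapse the 3D map into 2D layers; None marks never-observed columns."""
    names = ("elevation", "fringe", "obs_distance", "time_since_seen")
    layers: Dict[str, list] = {n: [] for n in names}
    for x in range(m.nx):
        rows: Dict[str, list] = {n: [] for n in names}
        for y in range(m.ny):
            known = m.last_seen[x][y] is not None
            occ = [z for z in range(m.nz) if m.prob[x][y][z] > OCCUPIED]
            # Elevation: top of the highest occupied voxel (0 if observed but empty).
            if occ:
                rows["elevation"].append((max(occ) + 1) * m.cell_size)
            else:
                rows["elevation"].append(0.0 if known else None)
            # Fringe: an observed column with at least one unobserved 4-neighbour.
            rows["fringe"].append(known and any(
                0 <= x + dx < m.nx and 0 <= y + dy < m.ny and m.last_seen[x + dx][y + dy] is None
                for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1))))
            rows["obs_distance"].append(m.obs_dist[x][y])
            rows["time_since_seen"].append(now - m.last_seen[x][y] if known else None)
        for n in names:
            layers[n].append(rows[n])
    return layers


def layer_to_text(name: str, grid: list) -> str:
    """Render a layer as a compact text grid (one row per y) for an LLM prompt."""
    def cell(v):
        if v is None:
            return "?"                 # never observed
        if isinstance(v, bool):
            return "F" if v else "."   # fringe / not fringe
        return f"{v:g}"
    rows = [" ".join(cell(grid[x][y]) for x in range(len(grid))) for y in range(len(grid[0]))]
    return f"{name}:\n" + "\n".join(rows)


def build_prompt(layers: Dict[str, list], directive: str) -> str:
    """Combine all layers with a domain-specific directive into one prompt string."""
    parts = [layer_to_text(n, g) for n, g in layers.items()]
    parts.append(f"Directive: {directive}")
    parts.append("Reply with the next waypoint as (x, y) and a one-sentence reason.")
    return "\n\n".join(parts)


# Usage: a 3x2x4 map with two observed columns.
m = VoxelMap(nx=3, ny=2, nz=4)
m.observe_column(0, 0, height=2, t=0.0, dist=5.0)
m.observe_column(1, 0, height=0, t=3.0, dist=2.0)
layers = summarize_layers(m, now=10.0)
print(build_prompt(layers, "Prefer low ground; avoid stale areas."))
```

Encoding each layer as a small text grid keeps the prompt human-readable, which also helps a user follow the agent's explanation; a larger map would likely need coarser summaries to stay within the model's context window.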